Understanding the Future: Demystifying Generative Artificial Intelligence Art and its Workings

I am certainly not an expert in artificial intelligence, but I do have hands-on experience making artificial intelligence artwork, and that is what I will share here. This subject has many facets, especially the legal, moral, and ethical questions around artificial intelligence, but what I am sharing here is based on my own practice. I acknowledge the vast potential for AI to cause harm. This post is not meant to debate that point but to clarify how the AI platforms I use generate images. Don’t worry. More blog posts are coming that will dive deeper into this subject. This is just the primer.

What is artificial intelligence artwork?

The generative artificial intelligence programs I use are Internet-based software that surveys large data sets and, with machine learning, makes sense of that data. I work with various platforms, and all are trained on images from the internet, which are tagged with text-based descriptors.

Why would images on the internet be tagged with text-based descriptors?

One best practice for website design is to include a text-based description of every image on a website so that people who use enhanced accessibility features can have a description of the visuals read aloud to them. Think of a visually impaired person who cannot see the image clearly and instead listens to a description of it. Additionally, search engine optimization (SEO) encourages this tagging, because search engines tend to rank websites higher when their images carry metadata, often keywords associated with the visual and the page content. Therefore, many images on the web now have text directly linked to the image. These text-image pairings account for many of the datasets used to train AI. There are other text-image pairings in the datasets, but this is just meant to give a general understanding of what data is used, where it might come from, and what machine learning does with it.
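To make the idea of a text-image pairing concrete, here is a minimal sketch of how the descriptions attached to images on a web page could be collected. It uses only Python’s standard library. The sample page, the file names, and the helper names (`AltTextCollector`, `extract_image_text_pairs`) are made up for illustration; this is not how any particular AI company actually built its dataset.

```python
from html.parser import HTMLParser


class AltTextCollector(HTMLParser):
    """Collects (image source, alt text) pairs from <img> tags."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # An attribute written without a value is reported as None.
        alt = (attributes.get("alt") or "").strip()
        src = attributes.get("src")
        # Keep only images that actually carry a description.
        if src and alt:
            self.pairs.append((src, alt))


def extract_image_text_pairs(html):
    """Return every (src, alt) pair found in an HTML string."""
    collector = AltTextCollector()
    collector.feed(html)
    return collector.pairs


# Hypothetical page: one described image, two without usable text.
sample_page = """
<html><body>
  <img src="lion.jpg" alt="A male lion resting in tall grass">
  <img src="spacer.gif" alt="">
  <img src="logo.png">
</body></html>
"""

pairs = extract_image_text_pairs(sample_page)
print(pairs)
```

Only the lion image ends up in `pairs`, because it is the only one with a description. Images without text attached have nothing to be paired with.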

How do text-based image descriptions help generative AI?

During training, generative artificial intelligence analyzes these image-text pairs to learn essential characteristics. Let’s use the example of a lion. From all the training images paired with the text “lion,” the AI learns what those images have in common. When a user prompts it to create an image of a lion, it reconfigures those learned commonalities into a new image. Note that the new image is a reconfiguration of shared commonalities. It is not a collage of related images, nor is it a composite of those images, as either of these would allow only a finite number of variations. Generative AI is arguably capable of producing an infinite number of variations for a prompt.

Different AI models are trained on datasets of different sizes. Stable Diffusion had an initial training dataset of approximately 5 billion images. This seems like a neat little package, but some parts of this logic break down in practice. Here are a few examples.

1. The Marilyn Monroe Effect

There are a lot of images of Marilyn Monroe on the internet that are tagged with text: artwork, historical photographs, promotional images, etc. Her celebrity life was well documented. If you prompt an AI generator to create an image of Marilyn Monroe, it has no problem discerning the essential characteristics behind the term “Marilyn Monroe.” The algorithm has learned that Marilyn Monroe was a young white woman with short, curly blonde hair, often shown with her eyes partially closed. Her lips are most often deeply tinted. It has learned her beauty mark, her bone structure, her facial symmetry, etc. It then produces an image of Marilyn Monroe based on those essential features. When you hit generate over and over again, you get different, new images of Marilyn Monroe as the AI sort of rolls the dice with those characteristics. This works well for pop culture icons like Marilyn Monroe, who appear in many photographs, especially when those photographs are very similar in nature. The drawback is that the AI has difficulty creating any representation of Marilyn Monroe that deviates too far from the Andy Warhol-esque depiction of her.

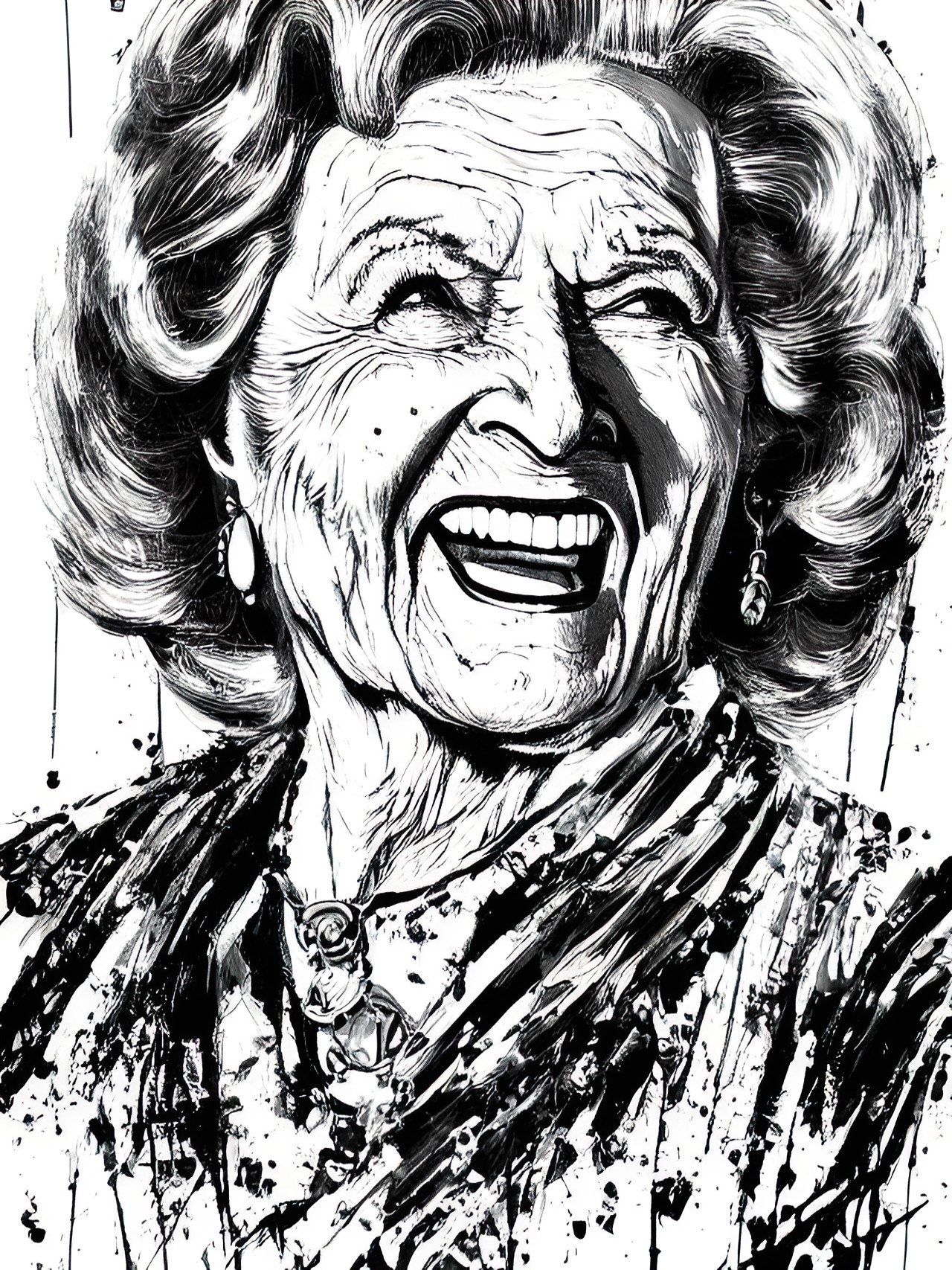

2. The Betty White Effect

Let’s consider another pop culture icon, Betty White. Betty White had been a Hollywood icon since she was 27. Her life as an actress was well documented from her twenties until she died at age 99. Those images are text-tagged and live on the internet. So AI should be able to learn the characteristics of Betty White and produce images of her just as easily as it does for Marilyn Monroe. It can, but with limitations. Here’s why. Most of the images text-paired with the term “Betty White” come from her later years. Technological advances have made it easier to capture and publish images; therefore, the majority of the images of Betty White in the dataset show an older Betty White. Good luck getting AI to produce a young Betty White. Even though those images are in the dataset, there are not enough of them to influence the end result, so Betty White always appears old because that is what dominates the dataset.

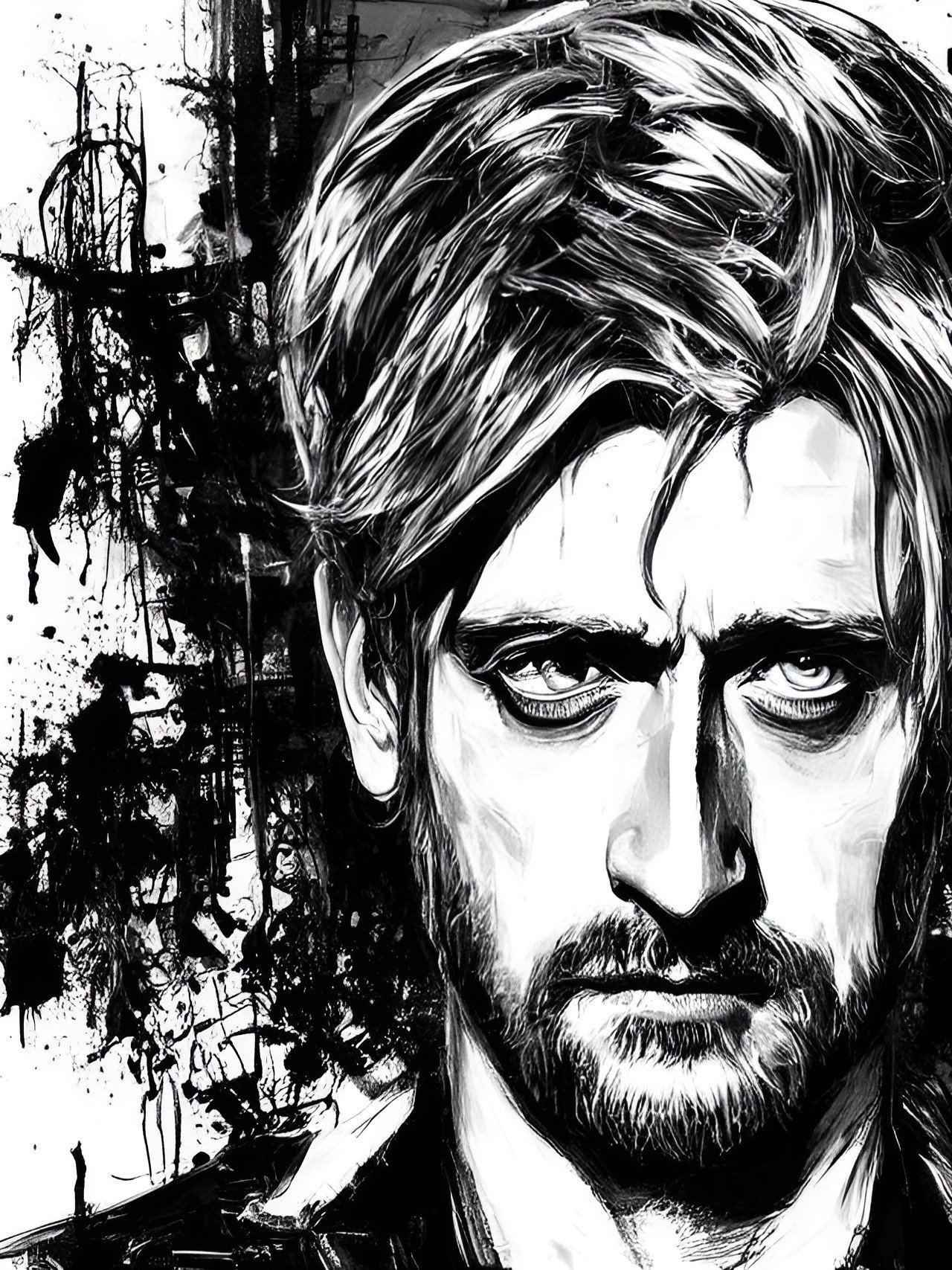

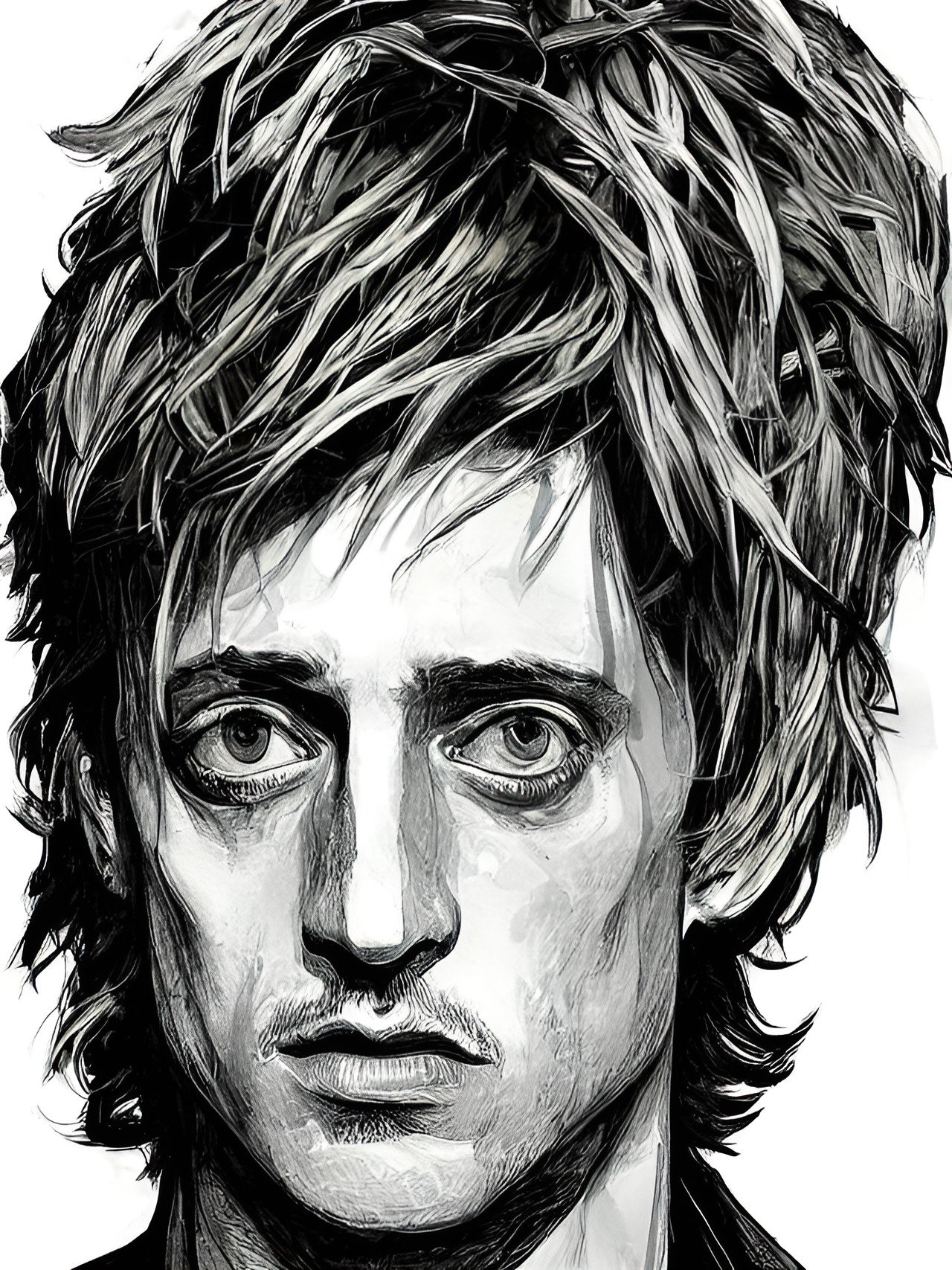

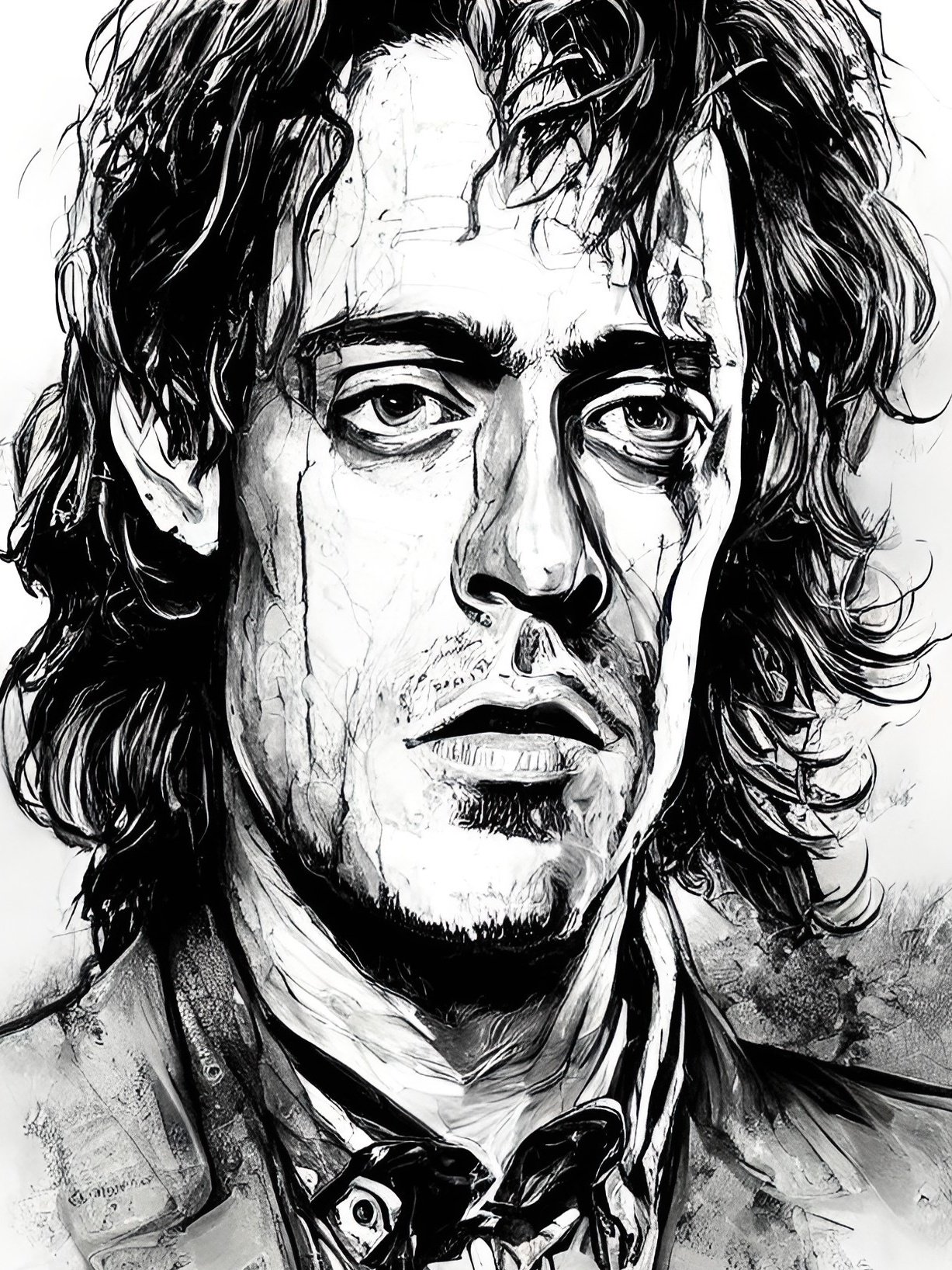
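The way a dominant group in the data crowds out a smaller one can be sketched with a toy simulation. To be clear, this is a loose analogy, not how an image model works internally: a real model does not pick training images at random. The counts below are invented for illustration, and `sample_generations` is a hypothetical helper.

```python
import random
from collections import Counter


def sample_generations(dataset_counts, n, seed=0):
    """Draw n labels at random, weighted by how often each appears in the data."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    labels = list(dataset_counts)
    weights = [dataset_counts[label] for label in labels]
    return Counter(rng.choices(labels, weights=weights, k=n))


# Invented numbers: 95% of the tagged images show the older subject.
hypothetical_counts = {"older": 9500, "younger": 500}

results = sample_generations(hypothetical_counts, 1000)
print(results)
```

With a split this lopsided, the “younger” label shows up only a small fraction of the time. The minority images are present in the data, but there are not enough of them to steer the typical result.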

3. The Macaulay Culkin Effect

Consider other pop culture icons who have undergone dramatic changes in their appearance: Val Kilmer, Brendan Fraser, Marlon Brando, Madonna, Oprah Winfrey, Michael Jackson, etc. Method actors, people who have been “in the spotlight” for their entire lives, and people who have altered their appearance are represented in the training dataset by several distinct looks in roughly equal numbers. The AI has difficulty discerning what the essential features of such a person are, and it is unable to produce images that appropriately represent them.

4. The Nobody Effect

Similarly, AI cannot produce accurate images of people whose likeness is not well documented or tagged in the dataset, at least for now, and this is probably a good thing. But it stems from the same issues listed above, so it is worth noting.

5. The Biases of the Internet

What is becoming apparent with generative image AI is that, because it has been trained on sets of images from the internet, it carries all the biases of the internet. Young white people are generated most often and are most often portrayed as attractive, while marginalized people are portrayed in negative ways (aggressive, poor, dirty, disheveled) or are completely absent from generations unless specifically listed in the prompt. For example, in hundreds of thousands of prompt generations of women, all women randomly generated by the AI were Caucasian unless a different race was added to the prompt. There is a strong age bias toward images of younger people (ages 18 to 30 seem most common). Women often appear weak, battered, sad, or overly sexualized. Men appear fit, strong, serious, and domineering. All of this is the obvious conclusion given the training dataset and the biases of the internet, but it is notable nonetheless.

6. The Anatomy Problem

These factors of accurate text-image pairings and appropriate variations in the training datasets extend to inanimate objects and animals. How many legs does a table have? How many horns does a goat have? These are very basic essential characteristics of anatomy, but there are a lot of text-tagged images of tables and goats on the internet. Those images are from different perspectives and different angles. Sometimes tables have three legs or a pedestal base with no legs. Sometimes goats are depicted in pairs, in groups, or in herds. So when the computer analyzes these datasets for essential features, it can lose sight of some very basic specifics. This is exactly the problem the AI runs into when generating images of people that show hands or feet. Different software platforms are working on this issue in different ways, and some apps have been able to make big improvements here.

While generative AI art carries immense potential, it comes with its own unique challenges. As this field continues to evolve, I am excited to see how AI and AI artists work to make artwork more inclusive, diverse, and accurate. Like and share the post, and if you found it particularly interesting and want to learn more about me, explore more of my AI art, or purchase one of my pieces, please visit my website, www.aliceabsolutelystudios.com.